Insights from Zee Palm's Team

We talk about products, app development, and next-generation apps.

Blockchain in Public Sector Software: Use Cases

Blockchain is transforming how government agencies manage data and deliver services. Its decentralized structure, tamper-proof records, and automated processes address longstanding challenges like data security, fraud, and inefficiency. Unlike older systems that rely on centralized databases vulnerable to breaches, blockchain distributes data across multiple nodes, enhancing security and transparency.

Key benefits include:

- Transparency: Publicly verifiable records improve accountability.

- Data Security: Distributed networks reduce hacking risks.

- Fraud Prevention: Immutable ledgers and smart contracts minimize manipulation.

- Efficiency: Automation reduces manual work and speeds up processes.

However, challenges like regulatory uncertainty, integration with existing systems, and high initial costs require careful planning and expertise. Pilot programs and collaborations with experienced developers can help agencies navigate these hurdles.

Blockchain is already being tested in areas like digital identity, voting, and tax collection, with states like Colorado and Wyoming leading the way. Agencies adopting this technology now will be better equipped to meet future demands for secure, efficient public services.

1. Blockchain Technology in U.S. Public Sector Software

Blockchain is reshaping how U.S. government agencies manage data and deliver services to citizens. By 2018, 18 U.S. states had introduced blockchain-related legislation, with 9 bills successfully passed into law. This growing interest highlights how blockchain's features can tackle persistent challenges in public administration, particularly in areas like transparency, security, and efficiency.

Transparency

One of blockchain's standout features is its ability to create a tamper-proof, publicly verifiable record - a level of transparency that traditional databases simply can't achieve.

Take the Colorado Department of State as an example. Back in 2018, Colorado initiated efforts to explore blockchain for encryption and data integrity in government records. This move was aimed at not only securing public records but also ensuring citizens could independently verify the authenticity of government data.

Such initiatives help foster trust between citizens and government agencies. Whether it's procurement decisions, voting records, or benefit distributions, blockchain ensures that citizens can verify these processes on their own, reinforcing accountability.

Data Security

Traditional systems often rely on centralized databases, making them vulnerable to breaches. Blockchain, on the other hand, distributes data across multiple nodes, significantly reducing the risk of unauthorized access.

With its cryptographic safeguards, blockchain can keep sensitive information - like health records - accessible only to authorized parties, provided encryption and access controls are designed in from the start. This reduces the chances of data breaches while maintaining privacy. Additionally, because each block carries a cryptographic hash of the block before it, information cannot be altered without detection.
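The tamper-detection idea above can be sketched as a simple hash chain. This is a minimal illustration in plain Python, not a real blockchain: there is no network, consensus, or encryption, and the function names and sample records are assumptions made for the example.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records so that each block commits to the one before it."""
    chain = []
    prev_hash = GENESIS_HASH
    for index, data in enumerate(records):
        current = block_hash(index, data, prev_hash)
        chain.append(
            {"index": index, "data": data, "prev_hash": prev_hash, "hash": current}
        )
        prev_hash = current
    return chain


def first_invalid_block(chain):
    """Return the index of the first block that fails verification, or None."""
    prev_hash = GENESIS_HASH
    for block in chain:
        expected = block_hash(block["index"], block["data"], prev_hash)
        if block["prev_hash"] != prev_hash or block["hash"] != expected:
            return block["index"]
        prev_hash = block["hash"]
    return None
```

Editing the data in any block changes the hash that block should have, so `first_invalid_block` reports where the chain stops verifying. In a distributed deployment, other nodes holding their own copies would reject the altered version.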

For government agencies managing sensitive citizen data, such as Social Security numbers or tax records, blockchain's "security-by-design" approach offers a robust solution to protect against unauthorized access and tampering.

Fraud Prevention

Blockchain's immutable ledger ensures that every transaction is permanently recorded, leaving no room for manipulation or erasure.

Smart contracts, another key feature, automate processes like compliance checks and benefit distributions. These self-executing agreements reduce human error and opportunities for corruption. For example, when eligibility criteria are embedded into a smart contract, payments are only released when all conditions are met, eliminating bias or manipulation.
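The eligibility logic described above can be illustrated in ordinary Python. This sketch only simulates the rule a smart contract would encode. It is not contract code for any particular blockchain platform, and the `Claim` fields and the income threshold are hypothetical.

```python
from dataclasses import dataclass

INCOME_LIMIT = 2000  # hypothetical monthly income threshold, in whole dollars


@dataclass(frozen=True)
class Claim:
    applicant_id: str
    monthly_income: int
    is_resident: bool
    already_paid: bool


def failed_conditions(claim):
    """Return the names of the eligibility checks this claim does not pass."""
    checks = {
        "income_within_limit": claim.monthly_income <= INCOME_LIMIT,
        "resident": claim.is_resident,
        "not_already_paid": not claim.already_paid,
    }
    return [name for name, passed in checks.items() if not passed]


def release_payment(claim, amount):
    """Release the payment only when every condition is met."""
    failures = failed_conditions(claim)
    if failures:
        return {"released": False, "amount": 0, "failed": failures}
    return {"released": True, "amount": amount, "failed": []}
```

Because the rule is a pure function of the claim, every applicant is evaluated the same way, and a rejected claim records exactly which conditions failed.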

Moreover, blockchain's audit trail makes it easier for government auditors to trace financial transactions and decisions, providing a clear path to identify and address any suspicious activity.

Operational Efficiency

Blockchain also has the potential to streamline government workflows. By automating approvals and generating real-time reports, the technology can reduce bottlenecks and errors. Similar success has been seen in municipal projects abroad, such as the Groningen Stadjerspas and the Emmen Energy Wallet in the Netherlands, and U.S. agencies could achieve comparable results in areas like land registry management, business licensing, and payroll tax collection.

Blockchain-based systems can execute these processes faster and with greater accuracy, saving time and resources. Combined with its enhanced security features, blockchain offers a compelling case for modernizing government operations.

However, implementing blockchain in government settings isn't without its challenges. The complexity of integrating blockchain with existing systems and ensuring compliance with regulations requires specialized expertise. This is where partnerships with experienced developers, such as Zee Palm (https://zeepalm.com), can make a difference. Their proven expertise in blockchain development helps ensure secure, scalable solutions tailored to government needs.

2. Traditional Public Sector Software Solutions

Before blockchain technology entered the scene, U.S. government agencies relied heavily on legacy software to manage tasks like citizen records and tax collection. These systems, built on centralized databases, handle millions of public records each year. However, as digital demands grow, these older systems struggle to keep up. This creates challenges in areas like transparency, security, fraud prevention, and operational efficiency.

Transparency

Traditional systems often fall short when it comes to transparency. Unlike blockchain's tamper-resistant records, legacy systems are plagued by fragmented data silos and delayed information sharing. Citizens frequently encounter disconnected systems that prevent real-time data exchange between agencies. For example, tracking government spending or contract details can be a frustrating process. Delayed disclosures, bureaucratic red tape, and incomplete audit trails make it hard for the public to follow how decisions are made or funds are allocated. Instead of having access to real-time updates, citizens are left relying on periodic reports.

Data Security

Transparency isn’t the only issue - data security is another major weakness of traditional systems. These systems use standard security tools like firewalls, encryption, and access controls to safeguard sensitive information. However, their centralized design creates a single point of failure, which makes them vulnerable to cyberattacks. Each year, the U.S. public sector reports numerous data breaches. These breaches are often linked to outdated software, poor patch management, or stolen credentials. Budget limitations frequently delay critical updates, leaving many agencies stuck with infrastructure that can’t support modern security measures.

Fraud Prevention

Fraud prevention is another area where traditional systems show their limitations. These systems rely on internal controls, segregation of duties, audits, and compliance checks to catch fraudulent activity. Unfortunately, these measures are often reactive, identifying fraud only after it has occurred. Manual processes in these older systems are particularly susceptible to manipulation. For instance, welfare and benefits programs have long struggled with fraudulent claims, partly because they lack integrated, real-time verification tools. Without the ability to cross-check data across agencies, individuals can sometimes receive duplicate benefits from multiple programs.

Operational Efficiency

Traditional systems aim to boost efficiency with tools like workflow automation, electronic document management, and centralized case management platforms. Despite these efforts, inefficiencies persist due to outdated infrastructure and siloed databases. Routine processes - such as issuing business licenses or managing land titles - often require interactions with multiple agencies and involve redundant paperwork. Citizens are frequently asked to provide the same information to different departments, while government employees waste time on repetitive data entry. Because these systems lack interoperability, agencies must rely on expensive custom solutions or manual data transfers to bridge the gaps.

Pros and Cons

When comparing blockchain-based public sector software with traditional systems, the differences are striking. Each approach brings its own set of strengths and challenges that government agencies must carefully evaluate. Below is a breakdown of how these two systems stack up across key criteria:

| Criteria | Blockchain-Based Systems | Conventional Systems |
| --- | --- | --- |
| Transparency | Immutable, auditable records create a full transaction history, making tracking straightforward and reliable | Isolated databases and manual record-keeping make it harder to trace data and often delay audits |
| Data Security | Decentralized networks with cryptographic security reduce vulnerabilities and improve resistance to cyberattacks | Centralized systems are more prone to hacking and other disruptions |
| Fraud Prevention | Tamper-resistant ledgers and automated smart contracts reduce the risk of manipulation and fraud | Reliance on manual processes opens doors to errors, fraud, and duplicate claims |
| Operational Efficiency | Automating tasks like payments and compliance through smart contracts minimizes manual work and speeds up services | Manual workflows and redundant paperwork slow down processes and increase labor demands |
| Implementation Costs | High upfront investment can lead to cost savings over time through automation and efficiency | Lower initial costs are often outweighed by ongoing administrative expenses |
| Scalability | Requires advanced infrastructure and careful planning to scale for large government applications | Handles large transaction volumes but may struggle to meet modern digital needs |
| Regulatory Framework | Legal and compliance requirements for blockchain remain uncertain as regulations continue to develop | Established guidelines and compliance procedures are already in place |

Real-world examples highlight these differences. For instance, the Groningen Stadjerspas system and the Emmen Energy Wallet showcased how blockchain enables secure, anonymous participation and rapid citizen engagement. These projects underline blockchain's potential to transform public services while reinforcing the challenges outlined above.

However, adopting blockchain isn't without hurdles. Integration with existing systems, unclear regulatory frameworks, and resistance from staff accustomed to traditional workflows can complicate implementation. Expert guidance is often essential to navigate these complexities effectively.

Scalability is another sticking point. Traditional systems can handle high transaction volumes but often compromise on efficiency and user experience. Blockchain networks, on the other hand, must carefully balance security, decentralization, and performance - an increasingly difficult task as applications grow.

Addressing these challenges calls for a strategic approach. Pilot programs and collaborations with experienced developers, such as those at Zee Palm, can help tackle technical obstacles, ensure compliance, and pave the way for successful implementation.

Conclusion

Blockchain is paving the way for a new era in public services, offering unmatched transparency, security, and efficiency. While traditional systems have served well in the past, they often fall short of meeting today’s demand for accountability and streamlined governance. Blockchain, on the other hand, aligns with these modern expectations, presenting a practical path forward.

The growing support for blockchain across U.S. states reflects its potential, and international success stories, like municipal projects in the Netherlands that serve thousands, showcase its effectiveness in real-world applications. These examples highlight not only the promise of blockchain but also the importance of strategic, well-planned implementation.

Of course, challenges remain. Regulatory uncertainty and the complexity of integrating legacy systems are significant hurdles. To address these, government agencies should start with pilot projects that demonstrate tangible value. Engaging stakeholders early, designing thoughtful pilot programs, and partnering with experienced developers are crucial steps. Collaborating with experts like Zee Palm (https://zeepalm.com), known for their deep experience in blockchain and public sector solutions, can provide the guidance needed to navigate these complexities.

Looking ahead, blockchain's role in modernizing U.S. public sector software is undeniable. Its ability to automate processes with smart contracts, create tamper-proof audit trails, and build citizen trust makes it an essential tool for the future of government operations. Agencies exploring blockchain applications in areas like identity management, benefit distribution, and record keeping today will be best positioned to lead tomorrow.

The evolution of public sector software through blockchain isn’t just a possibility - it’s an unfolding reality. Those who act now with careful planning, expert collaboration, and a focus on citizen needs will set the standard for transparent, secure, and efficient public services.

FAQs

How does blockchain improve transparency and trust in government services?

Blockchain brings a new level of transparency and trust to government services by using a decentralized and tamper-resistant ledger to record transactions and data. This system ensures that records can't be altered or manipulated, boosting accountability while curbing corruption.

When applied to areas like voting systems, land registries, and supply chain tracking, blockchain technology simplifies processes and provides citizens with greater visibility into government operations. At Zee Palm, we develop Web3 and blockchain solutions designed specifically for these purposes, enabling governments to implement secure and trustworthy systems.

What challenges might government agencies encounter when implementing blockchain in their systems?

Government agencies often encounter a range of obstacles when trying to incorporate blockchain into their existing systems. A major sticking point is compatibility with legacy systems. Many public sector platforms were developed long before blockchain was even a consideration, which can create significant technical and operational challenges during the integration process.

Another hurdle is maintaining data privacy and security compliance. While blockchain is known for its secure structure, government agencies must still navigate intricate regulations to ensure sensitive information is handled correctly. On top of that, high implementation costs and the demand for specialized expertise can make adoption difficult, especially for agencies operating with tight budgets or limited technical resources.

Even with these challenges, blockchain has the potential to revolutionize public sector operations by improving transparency, bolstering security, and increasing efficiency - provided it’s implemented with careful planning and strategy.

What are some key public sector applications of blockchain, and how has it improved transparency and security?

Blockchain technology has found its way into various public sector applications, offering improvements in transparency, security, and operational efficiency. Take voting systems, for instance - blockchain has been piloted as a way to create secure, tamper-resistant election processes, helping to build trust in democratic practices. Similarly, in land registry management, blockchain provides a way to establish unchangeable records of property ownership, cutting down on fraud and ownership disputes.

Another area where blockchain shines is public finance and procurement. By enabling transparent tracking of transactions and contracts, it reduces opportunities for corruption and ensures greater accountability. These examples highlight how blockchain can reshape public sector functions, offering a reliable way to protect sensitive information and promote trust.

Related Blog Posts

RESTful API Design for Enterprise Integration

RESTful APIs are critical for modern businesses to connect software, databases, and services. They enable scalable, secure, and efficient communication across systems, making them essential for industries like finance, healthcare, manufacturing, and e-commerce. For example, a U.S. financial company processes 360 billion API calls monthly, and Netflix uses RESTful APIs to support millions of users through microservices.

Key Takeaways:

- Scalability & Performance: RESTful APIs handle high traffic with stateless communication and lightweight JSON payloads.

- Industry Use Cases:
  - Healthcare: Real-time patient data exchange enhances telehealth.
  - Manufacturing: IoT integration improves monitoring and predictive maintenance.
  - E-commerce: APIs power omnichannel shopping experiences, like eBay's billion-plus listings.

- Design Principles:
  - Use clear, logical URIs (/users/123/orders).
  - Secure APIs with OAuth 2.0, HTTPS, and role-based access.
  - Plan for versioning (/v1/orders) to ensure smooth updates.

- Legacy Systems: RESTful APIs outperform older methods like SOAP, offering faster performance, simpler implementation, and lower maintenance costs.

RESTful APIs are reshaping industries by providing reliable, scalable, and secure solutions for enterprise integration. Businesses transitioning from legacy systems to RESTful APIs report reduced costs, faster response times, and improved operational efficiency.

Core Design Principles for Enterprise RESTful APIs

Building enterprise-grade RESTful APIs requires more than just basic functionality. These APIs must handle heavy traffic, safeguard sensitive information, and remain dependable under demanding conditions. The architecture of an enterprise API sets it apart from simpler implementations. Let’s dive into the essential design principles that form the backbone of a robust enterprise API.

Resource Modeling and URI Design

Effective resource modeling lays the foundation for maintainable APIs. The structure of URIs should reflect real-world business relationships in a way that’s intuitive for developers. Instead of using ambiguous endpoints like /data/fetch/user123, opt for logical hierarchies such as /users/123/orders or /projects/{id}/tasks/{taskId}/documents.

For example, a consulting and engineering company successfully streamlined its operations by centralizing project data from two separate systems. They designed resources around actual business processes, using URIs like /projects/{id}/tasks/{taskId}/documents. This approach improved data accuracy and simplified workflows across teams.

To keep things consistent, use plural nouns for collections (e.g., /orders) and apply uniform naming conventions throughout the API. A manufacturing ERP system, for instance, uses /inventory/items to represent product data, making it easier for developers to integrate and work with the API.

However, avoid creating overly complex, deeply nested URIs. While something like /users/123/orders/456/items/789/reviews might seem logical, it quickly becomes cumbersome. A flatter structure, such as /reviews/789, paired with query parameters to filter by user or order, can achieve the same functionality while maintaining clarity.
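As a rough sketch of the flatter structure, the helpers below build `/reviews` URLs with optional query-parameter filters instead of nesting every parent resource in the path. The host name and the `userId` and `orderId` parameter names are assumptions chosen for illustration.

```python
from urllib.parse import urlencode

BASE_URL = "https://api.example.com"  # hypothetical host


def reviews_url(user_id=None, order_id=None):
    """Build a collection URL, filtering by user or order via query parameters."""
    filters = {}
    if user_id is not None:
        filters["userId"] = user_id
    if order_id is not None:
        filters["orderId"] = order_id
    query = urlencode(filters)
    return f"{BASE_URL}/reviews" + (f"?{query}" if query else "")


def review_url(review_id):
    """Address a single review directly by its own identifier."""
    return f"{BASE_URL}/reviews/{review_id}"
```

With this layout a single review is always reachable at one short, stable address, while the parent relationships remain available as optional filters on the collection.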

Security and Scalability Implementation

Security is non-negotiable in enterprise environments. Start with multi-layered protection: OAuth 2.0 for authorization, HTTPS to encrypt communication, and role-based access controls tailored to your organizational structure.

A great example of security in action is Mastercard's Tokenization API. It adheres to PCI-DSS standards for secure payment processing, reducing fraud risks while supporting millions of secure transactions worldwide. By embedding security from the outset, this API ensures trust and reliability at scale.

To handle high traffic, implement throttling based on user tiers and design for horizontal scaling. Intelligent caching can further boost performance. RESTful APIs, for example, can handle 2.5 times more concurrent users than legacy systems while delivering response times up to 50% faster.
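Tier-based throttling is often implemented with a token bucket per client. The sketch below is one possible in-memory version. The tier names and limits are hypothetical, and a production gateway would normally keep this state in a shared store rather than in a Python dictionary.

```python
import time

# Hypothetical tiers: (bucket capacity, refill rate in requests per second)
TIER_LIMITS = {"free": (5, 1.0), "partner": (50, 10.0)}


class TokenBucket:
    def __init__(self, capacity, refill_per_second, now=None):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.updated_at = time.monotonic() if now is None else now

    def allow(self, now=None):
        """Refill for the elapsed time, then spend one token if available."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class Throttle:
    """Keeps one bucket per client, sized according to the client's tier."""

    def __init__(self):
        self.buckets = {}

    def allow(self, client_id, tier, now=None):
        if client_id not in self.buckets:
            capacity, rate = TIER_LIMITS[tier]
            self.buckets[client_id] = TokenBucket(capacity, rate, now)
        return self.buckets[client_id].allow(now)
```

A request that is not allowed would typically be answered with HTTP 429 (Too Many Requests). The optional `now` argument exists only so the behavior can be tested with a fixed clock.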

Netflix’s microservices architecture is a prime example of scalability in action. Built on RESTful APIs, it supports over 200 million subscribers with fault tolerance and rapid scaling. This design ensures minimal downtime during peak traffic events, enabling efficient troubleshooting and seamless scalability.

API Versioning and Documentation

Clear versioning is crucial to avoid integration headaches. URI versioning, like /v1/orders or /v2/users, helps communicate changes and updates clearly. Many enterprises manage multiple API versions simultaneously during migrations, using transformation layers to support older clients while introducing new features.
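One way to picture URI versioning with a transformation layer is a small dispatcher that serves the current representation under /v2 and reshapes it for clients still calling /v1. The field names and the `handle` function below are illustrative assumptions, not a specific framework's API.

```python
def get_order_v2(order_id):
    """Current representation of an order (hypothetical fields)."""
    return {
        "id": order_id,
        "customer": {"id": 42},
        "total": {"amount": "19.99", "currency": "USD"},
    }


def to_v1(order_v2):
    """Transformation layer: reshape a v2 order into the older v1 layout."""
    return {
        "order_id": order_v2["id"],
        "customer_id": order_v2["customer"]["id"],
        "total": order_v2["total"]["amount"],
    }


def handle(path):
    """Dispatch paths of the form /<version>/orders/<id>."""
    parts = path.strip("/").split("/")
    if len(parts) != 3 or parts[1] != "orders":
        return 404, {"error": "not found"}
    version, _, order_id = parts
    order = get_order_v2(order_id)
    if version == "v2":
        return 200, order
    if version == "v1":
        return 200, to_v1(order)
    return 404, {"error": f"unknown version {version}"}
```

Keeping a single source of truth (the v2 shape) and deriving v1 from it means older clients keep working without a second copy of the business logic.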

It’s equally important to establish deprecation policies. Provide advance notice and detailed migration guides to help clients transition smoothly when retiring older API versions. This transparency builds trust with partners who rely on your APIs for critical business functions.

Good documentation is the key to adoption and reducing support overhead. The OpenAPI Specification, together with tooling such as Swagger UI, makes it easier for developers to onboard and minimizes errors during integration. Companies like eBay and Twitter have shown how well-documented APIs can drive ecosystem growth and encourage broader adoption.

Comprehensive documentation should cover endpoint details, request and response examples, authentication methods, error codes, and real-world use cases. Go beyond listing capabilities - illustrate practical integration scenarios and provide troubleshooting tips for common challenges developers might face.

Finally, real-time monitoring and error logging are essential for long-term success. Track metrics like response times, error rates, and usage patterns to identify areas for improvement. This data helps you make informed decisions about scaling, caching, and feature enhancements.

Case Studies: RESTful API Integration in Enterprises

Real-world examples show how RESTful APIs can reshape enterprise operations across various industries. These case studies highlight measurable improvements achieved through effective API integration.

Healthcare: Patient Data Integration Systems

In 2023, a major hospital network faced a critical issue: its telehealth platform couldn't access real-time electronic health records (EHR), leading to delays and tedious manual cross-referencing. By integrating RESTful APIs, the hospital established secure, real-time data exchange between the telehealth platform and its existing EHR system, all while adhering to HIPAA regulations.

This change revolutionized patient care. Medical professionals could now access comprehensive patient histories, lab results, and medication records directly through the telehealth interface. Patients no longer had to repeat their medical details during virtual consultations, and administrative tasks like appointment scheduling and prescription management became far more streamlined.

Retail: Omnichannel Transaction Management

A national retail chain struggled with mismatched inventory data between its online store and physical locations. Customers frequently encountered out-of-stock messages, and store associates lacked up-to-date inventory information.

To fix this, the retailer deployed RESTful APIs to synchronize inventory and customer data across its e-commerce platform, point-of-sale systems, warehouse software, and mobile apps. The results were impressive: mobile load times improved by 38%, cart abandonment rates dropped by 17%, and cross-channel transactions increased by 23%. These upgrades created a seamless shopping experience, allowing customers to reserve items online for in-store pickup or easily return purchases at physical locations.

Manufacturing: IoT and ERP System Integration

A North American manufacturer faced inefficiencies in equipment monitoring due to its outdated SOAP-based ERP system, which couldn’t effectively communicate with modern IoT sensors. Over 18 months, the company transitioned from SOAP to RESTful APIs, running both systems simultaneously with transformation layers to convert XML to JSON.

This migration delivered tangible results: API response times dropped by 40%, annual savings reached $450,000, and a new mobile inventory management app was launched, giving plant managers and technicians real-time access to production data and inventory levels. Additionally, production equipment now automatically transmits performance metrics, temperature readings, and maintenance alerts. By leveraging machine learning, the company can predict equipment failures before they happen, reducing downtime and boosting efficiency.

These examples underscore how strategic API integration can enhance operations and deliver measurable benefits across industries.

RESTful APIs vs Legacy Integration Methods

Enterprises are increasingly moving away from legacy systems in favor of RESTful APIs to achieve better performance and scalability. Understanding the distinctions between these approaches is essential for successful system integration and modernization.

RESTful APIs vs SOAP: Key Differences

When comparing RESTful APIs and SOAP, the differences in performance, data handling, and developer experience stand out. RESTful APIs rely on lightweight JSON payloads, which are 30-70% smaller than SOAP's XML format. This results in up to 70% faster performance and the ability to handle 2.5 times more requests than SOAP. These advantages, combined with a stateless architecture, translate into measurable performance improvements that organizations can leverage.

| Feature | RESTful APIs | SOAP (Legacy) |
| --- | --- | --- |
| Payload Format | JSON (lightweight) | XML (verbose) |
| Performance | High, stateless, scalable | Slower, stateful, less scalable |
| Developer Experience | Easier, widely adopted | Complex, steeper learning curve |
| Flexibility | Suited for microservices | Monolithic, rigid |
| Maintenance | Lower overhead | Higher maintenance costs |
| Adoption | Modern, cloud-native | Legacy, on-premises |

For developers, RESTful APIs are far more approachable. They use standard HTTP methods like GET, POST, PUT, and DELETE, simplifying implementation and reducing the learning curve. RESTful APIs also require less boilerplate code and provide straightforward documentation, making integration and onboarding much faster. SOAP, on the other hand, involves strict XML schemas and the use of complex WSDL files, which increase development effort and require specialized expertise.

The benefits of RESTful APIs are evident in real-world applications. For instance, a large U.S.-based financial services company transitioned to a RESTful API-driven architecture and now manages an astounding 360 billion API calls per month (roughly 12 billion per day, with peaks of 2 million calls per second). This demonstrates the scalability and efficiency that modern APIs can achieve.

These advantages highlight why RESTful APIs are a powerful solution for addressing the limitations of legacy systems.

Legacy System Challenges

Legacy integration systems often fall short of meeting the needs of today's enterprises. These older systems are associated with high maintenance costs, slower performance, and limited scalability. Compatibility issues frequently arise when attempting to connect legacy systems with modern platforms, leading to increased technical debt and making it harder to support new business initiatives or digital transformation efforts.

Security and compliance also pose significant challenges. Legacy systems often rely on outdated protocols, making it difficult to implement modern security measures and leaving organizations vulnerable to cyber threats. Additionally, these systems struggle to adapt to changing regulatory requirements, further complicating compliance efforts. Their rigid design limits flexibility, making it challenging for businesses to respond quickly to evolving market demands or customer needs.

The financial strain of maintaining legacy systems is well-documented. For example, a North American manufacturing company saved $450,000 annually after migrating from SOAP to RESTful APIs, while also achieving a 40% improvement in API response times. These savings stem from reduced infrastructure costs, lower maintenance requirements, and better operational efficiency.

RESTful APIs offer a modern alternative that aligns with current enterprise needs, including mobile applications, IoT integration, and cloud-native systems. Their lightweight, stateless design and reliance on standard web protocols make them especially well-suited for mobile and IoT use cases, which demand efficient, scalable, and real-time data exchange. RESTful APIs also integrate seamlessly with SaaS platforms and distributed systems, making them a cornerstone of digital transformation initiatives.

Migrating from legacy systems to RESTful APIs requires thoughtful planning but delivers clear benefits. Many enterprises adopt a phased approach, running SOAP and REST endpoints concurrently during the transition. Transformation layers are used to convert between XML and JSON formats, ensuring minimal disruption while enabling a gradual shift to modern API architecture. This strategy allows organizations to modernize their systems efficiently while maintaining continuity.
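A transformation layer of this kind can be approximated with the Python standard library. The sketch below converts a simple XML payload into JSON. It ignores attributes and XML namespaces, both of which a real SOAP envelope would require handling, and the sample element names used to exercise it are made up.

```python
import json
import xml.etree.ElementTree as ET


def element_to_dict(element):
    """Convert an element to a string (leaf) or a dict of its children."""
    children = list(element)
    if not children:
        return (element.text or "").strip()
    result = {}
    for child in children:
        value = element_to_dict(child)
        if child.tag in result:
            # Repeated tags become a JSON array.
            if not isinstance(result[child.tag], list):
                result[child.tag] = [result[child.tag]]
            result[child.tag].append(value)
        else:
            result[child.tag] = value
    return result


def xml_to_json(xml_text):
    """Parse an XML document and serialize it as a JSON string."""
    root = ET.fromstring(xml_text)
    return json.dumps({root.tag: element_to_dict(root)}, sort_keys=True)
```

All leaf values come through as strings. A fuller layer would also map types (numbers, booleans, dates) according to the legacy schema before handing the JSON to REST clients.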

Best Practices from Enterprise API Projects

Enterprise API projects consistently demonstrate that smooth migrations, real-time performance tracking, and strong collaboration with stakeholders are key to achieving success. Companies that adopt these strategies often avoid costly mistakes and deliver better results.

Enterprise API Migration Strategies

A phased rollout with dual endpoint support is one of the most effective ways to manage API migrations. This approach allows businesses to introduce new RESTful endpoints while keeping legacy systems fully operational, minimizing disruptions during the transition.

For example, a major US financial services company successfully consolidated three API management platforms into a single open-source gateway. By employing live configuration switching, they processed 360 billion API calls monthly without any downtime during upgrades. This effort, led by their DevOps and engineering teams, significantly reduced operational complexity and improved scalability.

Transformation layers also play a critical role in API migrations, especially when bridging the gap between legacy systems and modern platforms. These layers can handle tasks like XML-to-JSON data conversions, which streamline communication between systems. A North American manufacturing company used transformation layers to achieve a 40% improvement in response times and save $450,000 annually.

Another best practice is prioritizing high-impact APIs during migration. By focusing on customer-facing APIs and high-volume transactions first, businesses can ensure critical operations remain uninterrupted. Internal systems can then be migrated gradually, reducing risks to revenue-generating activities. This approach requires meticulous planning and thorough testing to protect core business functions.

Backward compatibility is another essential consideration. Maintaining support for existing integrations while introducing new capabilities allows internal teams and partners to migrate at their own pace. Additionally, having clear rollback procedures in place ensures that any issues can be quickly addressed without jeopardizing the system.

Even after a migration is complete, continuous monitoring and optimization are necessary to maintain top-notch API performance.

API Monitoring and Performance Optimization

Real-time analytics and automated error detection are indispensable for keeping enterprise APIs running smoothly at scale. For companies processing billions of API calls each month, monitoring systems that track response times, error rates, throughput, and uptime are critical.

Enterprises that invest in robust monitoring often experience up to a 30% reduction in downtime and a 25% boost in performance. These gains come from the ability to identify and address issues proactively, preventing minor problems from escalating into major outages.

Custom alerts and detailed error logging further enhance performance management. For instance, one company monitoring RESTful APIs for IoT devices reduced latency by 50% and cut data overhead by 45%.

Ongoing performance optimization involves closely watching key metrics. Response times can reveal slow endpoints, while throughput analysis uncovers capacity bottlenecks. Tracking error rates helps pinpoint integration issues or system failures that need immediate attention.
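To make these metrics concrete, here is a small sketch that computes an error rate and a nearest-rank latency percentile from request samples. The `RequestSample` type and the choice to count only 5xx responses as errors are assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class RequestSample:
    latency_ms: float
    status_code: int


def error_rate(samples: List[RequestSample]) -> float:
    """Share of requests that ended in a server error (5xx)."""
    if not samples:
        return 0.0
    errors = sum(1 for s in samples if s.status_code >= 500)
    return errors / len(samples)


def latency_percentile(samples: List[RequestSample], pct: int) -> float:
    """Nearest-rank percentile of latency; pct is an integer from 1 to 100."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(s.latency_ms for s in samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]
```

Watching a high percentile such as p95 alongside the median tends to surface slow endpoints that an average would hide.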

Automated tuning tools and regular performance reviews ensure that APIs continue to meet growing demands. Load testing and capacity planning are especially important for organizations expecting to support millions of users or handle spikes in traffic during peak periods.

Working with Stakeholders on API Projects

Beyond the technical aspects, aligning with stakeholders is essential for sustained API success. Open communication and early involvement help prevent costly rework and ensure the project aligns with business priorities. Many successful companies create collaborative workshops and feedback loops to keep technical teams connected to business needs throughout the development cycle.

Cross-functional collaboration between IT and business stakeholders is critical. When technical teams have a clear understanding of real-world business requirements, they can build APIs that address practical challenges rather than hypothetical ones. This alignment is crucial in complex enterprise environments where multiple departments rely on interconnected systems.

Transparent documentation and regular updates also play a big role in stakeholder engagement. Companies that maintain detailed API documentation and consistent versioning practices find it easier to onboard new partners and manage future integrations. As API programs grow and new endpoints are added, this documentation becomes even more valuable.

Involving stakeholders early in the planning phase helps identify potential issues that technical teams might miss. Business stakeholders often have a deep understanding of data flows and integration points, making their input invaluable for ensuring APIs support complete business processes.

Regular feedback sessions with stakeholders help catch misalignments before they become bigger problems. These touchpoints allow teams to adjust when business needs shift or initial assumptions prove incorrect. Companies that adopt this approach often report higher adoption rates and fewer post-launch changes.

Zee Palm's RESTful API Development Services

With over a decade of experience in enterprise RESTful API development, Zee Palm has successfully completed more than 100 projects, supported by its 13-member team of specialists. They craft secure, scalable API solutions designed to meet the complex demands of modern enterprises, helping businesses navigate digital transformation with confidence. Their expertise is rooted in creating APIs that streamline enterprise integration, ensuring seamless connectivity across systems.

Zee Palm's approach is all about customization. Each API is tailored to the unique needs of the client, leveraging best practices like modular architecture, consistent versioning, and advanced security protocols. This ensures APIs deliver top-tier performance while remaining adaptable to evolving business requirements.

Zee Palm's Enterprise Integration Expertise

Zee Palm prioritizes both security and scalability in its API designs. By implementing cutting-edge measures like OAuth 2.0 authentication, HTTPS encryption, and fine-grained access controls, they ensure API endpoints remain secure. At the same time, they design stateless APIs, use rate limiting, and deploy API gateways to manage traffic efficiently and maintain high availability.

This meticulous approach reduces integration time by up to 60% and cuts costs significantly. The team addresses challenges like legacy system compatibility, data consistency, and performance bottlenecks through phased rollouts, transformation layers (e.g., XML-to-JSON), and parallel endpoint operations.

Long-term usability is another cornerstone of Zee Palm's strategy. They emphasize clear documentation, modular architecture, and consistent versioning to ensure APIs can evolve alongside the client’s business. Ongoing monitoring and performance optimization further support the seamless integration of APIs into growing and changing technological ecosystems.

Industries Served by Zee Palm

Zee Palm’s API solutions cater to a wide range of industries, including healthcare, manufacturing, EdTech, blockchain/Web3, and IoT. Their expertise in these sectors allows them to develop tailored solutions that address specific industry challenges.

In healthcare, Zee Palm has developed APIs that enable real-time patient data exchange and improved care coordination. These solutions comply with HIPAA regulations and support interoperability standards, ensuring secure and efficient handling of sensitive medical data.

For EdTech, Zee Palm has created APIs for e-learning platforms and learning management systems. These tools ensure smooth content delivery, effective user management, and scalability to accommodate large student populations.

In the manufacturing sector, their APIs integrate seamlessly with ERP and IoT systems, enabling real-time data exchange and process automation. Manufacturers benefit from enhanced operational visibility, inventory tracking, and workflow optimization.

Zee Palm’s work in blockchain and Web3 includes APIs that facilitate secure transaction management and decentralized data handling. These solutions meet the unique demands of blockchain applications while maintaining enterprise-grade reliability and performance.

Why Choose Zee Palm for API Development

Zee Palm’s portfolio includes more than 70 satisfied clients, with each project rooted in a deep understanding of industry-specific needs. Their API solutions are designed to align with business goals, delivering measurable benefits such as improved data accuracy, enhanced mobile app performance, streamlined workflows, and increased cross-channel transactions.

The company’s experience spans diverse fields like AI, SaaS, healthcare, EdTech, Web3, and IoT, giving them the expertise to tackle even the most complex enterprise integration challenges. By applying insights gained from past projects, Zee Palm reduces development risks and accelerates deployment timelines, ensuring RESTful APIs play a pivotal role in modern enterprise systems.

FAQs

How do RESTful APIs improve the scalability and performance of enterprise systems compared to older integration methods?

RESTful APIs improve scalability and performance for enterprise systems by relying on lightweight, stateless communication. Because each request carries everything the server needs to process it, any instance behind a load balancer can handle it, which makes it simpler to scale across distributed systems. They also use standard HTTP, so existing infrastructure such as caches and proxies can help deliver quicker response times and better use of resources.

Another advantage lies in their modular design. Enterprises can add new services or features without needing to revamp the whole system. With support for real-time data exchange and the ability to handle heavy traffic, RESTful APIs offer the adaptability and efficiency that modern businesses depend on to keep up with growth and change.

What are the essential security practices for designing a RESTful API in enterprise applications?

When building a RESTful API for enterprise applications, security should be a top priority to safeguard sensitive information and keep systems running smoothly. Here are some essential practices to keep in mind:

- Authentication and Authorization: Implement robust methods like OAuth 2.0 to ensure only verified users or systems can access the API.

- Data Encryption: Use HTTPS to encrypt data during transmission, and consider encrypting sensitive information stored on servers to add an extra layer of protection.

- Rate Limiting: Set limits on the number of requests a single client can make to prevent abuse and minimize the risk of denial-of-service (DoS) attacks.

- Input Validation: Reduce vulnerabilities like injection attacks by thoroughly validating and sanitizing all incoming data.

Focusing on these strategies helps enterprises create APIs that are secure, dependable, and ready to integrate seamlessly with other systems.
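Of the practices above, rate limiting is the easiest to show in a few lines. The sketch below uses a token bucket, which is one common technique among several. The class name, parameters, and injectable clock are illustrative choices.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Add tokens for the time that has passed, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

In practice one bucket is kept per client (for example, per API key), and a rejected request is typically answered with HTTP status 429.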

What are the best practices for managing API versioning to ensure smooth enterprise integration and minimal disruption during updates?

To manage API versioning effectively in enterprise settings, it’s crucial to implement strategies that maintain compatibility and reduce downtime. API versioning helps developers and systems handle updates without disrupting existing integrations.

Here are some key practices to consider:

- Use clear version identifiers: Make versioning straightforward by including version numbers in the API URL (e.g., /v1/). This approach ensures developers can easily identify and work with the appropriate version.

- Maintain backward compatibility: Whenever possible, avoid introducing breaking changes. Instead, phase out outdated features gradually, providing enough time for users to adapt before removing them.

- Communicate changes clearly and early: Offer detailed release notes, migration guides, and timelines to help users transition smoothly to newer versions.

By sticking to these principles, enterprises can update APIs efficiently while minimizing disruptions for users and ensuring smooth system integration.
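The sketch below shows one way these practices might fit together: the version is read from the URL path, and deprecated versions are flagged with a `Sunset` response header (defined in RFC 8594). The supported versions, the sunset date, and the `API-Version` header name are hypothetical.

```python
import re
from typing import Dict, Tuple

SUPPORTED_VERSIONS = {1, 2}
# Hypothetical retirement date for v1, formatted as an HTTP date.
DEPRECATED_VERSIONS = {1: "Sat, 31 Jan 2026 00:00:00 GMT"}

_VERSION_RE = re.compile(r"^/v(\d+)(/.*)?$")


def parse_version(path: str) -> Tuple[int, str]:
    """Split '/v1/orders/7' into (1, '/orders/7')."""
    match = _VERSION_RE.match(path)
    if not match:
        raise ValueError(f"missing version prefix: {path}")
    version = int(match.group(1))
    if version not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported version: v{version}")
    return version, match.group(2) or "/"


def response_headers(version: int) -> Dict[str, str]:
    headers = {"API-Version": str(version)}  # illustrative header name
    if version in DEPRECATED_VERSIONS:
        # Tells clients when this version is expected to stop working.
        headers["Sunset"] = DEPRECATED_VERSIONS[version]
    return headers
```

Clients on the old version keep working while receiving a machine-readable notice of when it will be retired.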


Debugging SQLite Databases in Mobile Apps

SQLite is widely used for local data storage in mobile apps due to its simplicity and offline functionality. However, debugging SQLite databases can be tricky, especially on mobile platforms like Android and iOS. Here's what you need to know:

- Why Debugging Matters: Ensures data integrity, prevents app crashes, and avoids issues like slow queries or corrupted data.

- Challenges: Limited file access, platform-specific tools, real-time data handling, and strict security policies.

- Tools: Android Studio Database Inspector, ADB commands, third-party libraries like Stetho, and desktop tools like DB Browser for SQLite.

- Best Practices: Regular database inspections, automated testing for CRUD operations and migrations, and expert support for complex issues.

Debugging SQLite databases is crucial for maintaining app performance and user trust. The right tools and methods can help you identify and fix issues efficiently, ensuring a smoother experience for your app users.


Tools for SQLite Database Debugging

When it comes to debugging SQLite databases, having the right tools can make all the difference. These tools generally fall into three categories: built-in utilities that are readily available during development, third-party libraries offering web or app-based interfaces, and desktop applications designed for in-depth analysis. Each category brings something unique to the table, making it easier to identify and resolve errors efficiently.

Built-In Debugging Tools

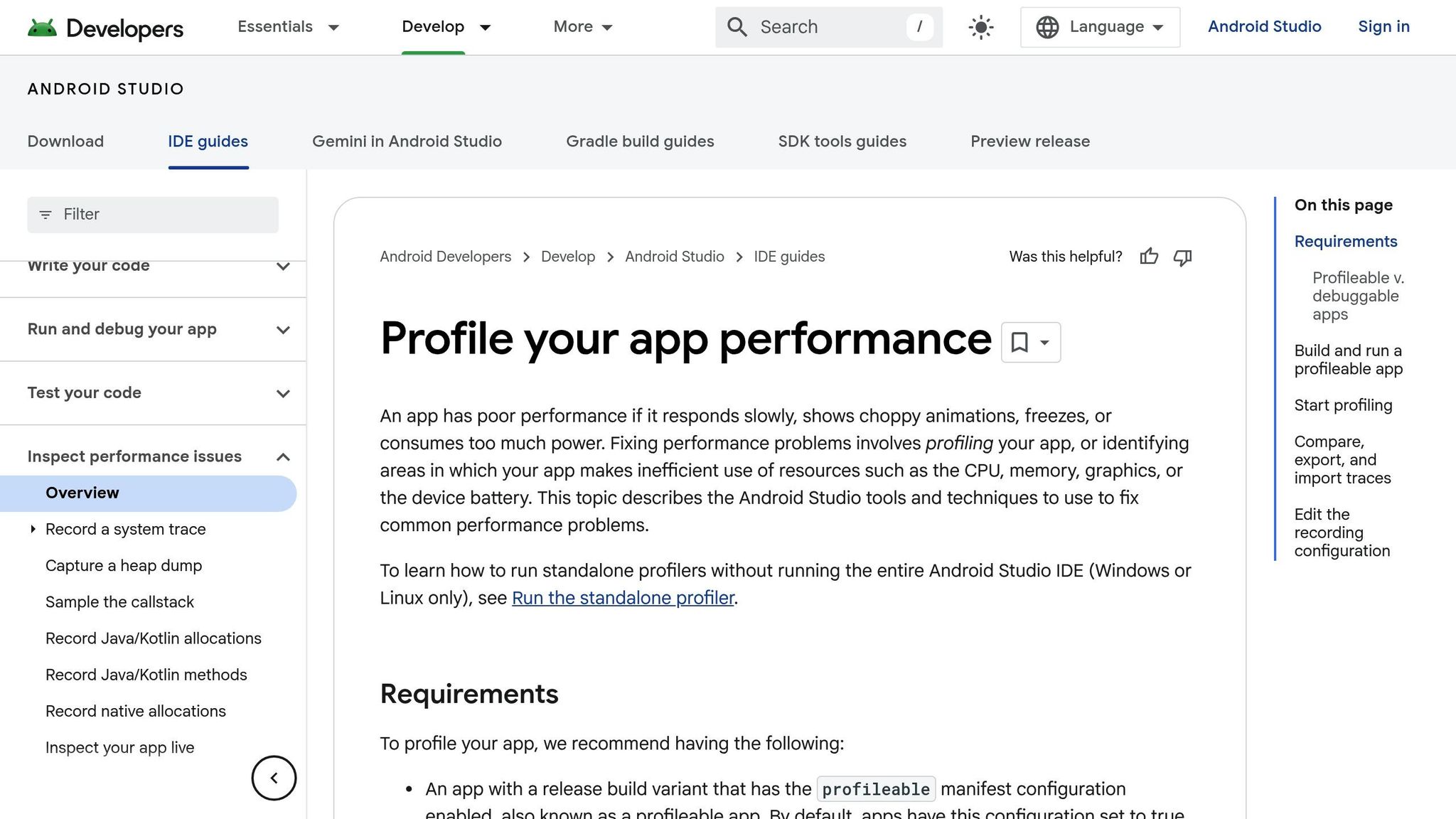

Android Studio Database Inspector is a standout tool for Android developers. It allows you to inspect SQLite databases in real-time while your app is running. You can execute Room DAO and custom SQL queries directly within the IDE, with results displayed instantly in a tabular format. If you're using Room with LiveData or Flow, the tool even updates the UI dynamically. Plus, its query history feature helps you quickly repeat common debugging tasks.

ADB (Android Debug Bridge) commands offer another powerful option for accessing database files on Android devices. By using the run-as command with your app's package name, you can navigate to the app’s private directory. The adb pull command lets you copy database files to your computer for further analysis. This method works with any debuggable app and simplifies file management for use with desktop tools.

Third-Party Debugging Libraries

Android Debug Database provides a user-friendly, web-based interface for database debugging. With this tool, you can view and edit database values, run SQL queries, and even export databases - all without needing root access. Its local server interface makes it easy to inspect your app’s data directly from a web browser.

Stetho, created by Facebook, integrates seamlessly with Chrome Developer Tools. This makes it a great choice for web developers familiar with Chrome’s debugging environment, as it brings SQLite debugging directly into the browser.

SQLScout is a plugin for Android Studio and IntelliJ IDEA that lets you browse and edit an app's databases from within the IDE. Its graphical interface simplifies complex tasks and supports working with multiple databases at once, which makes it particularly useful for apps with more intricate data structures.

These third-party libraries are especially helpful in collaborative settings, such as during QA testing, where quick checks on data integrity are often required.

Desktop Tools for Database Analysis

For more comprehensive database analysis, DB Browser for SQLite is a go-to option. This open-source application works across Windows, macOS, and Linux, offering features like a visual query builder, schema editing, and advanced data import/export capabilities. It’s perfect for handling complex queries and visualizing data.

SQLiteStudio is another excellent desktop tool, known for its flexibility and support for plugins. It ensures smooth performance across various operating systems while providing robust features for database management.

Desktop tools are particularly effective when dealing with large datasets, performing batch operations, comparing schemas, or generating detailed reports. A common workflow involves exporting database files using ADB commands, then analyzing them with these applications for a deeper dive into the data.

As highlighted by developers at Zee Palm, custom scripts and automated toolchains can further streamline debugging workflows. Armed with these tools, developers are well-prepared to tackle the direct debugging techniques discussed next.

Step-by-Step SQLite Database Debugging Methods

With the tools covered, here's a practical workflow for debugging SQLite databases. The process has three parts: accessing database files, inspecting them in real time, and exporting them for detailed desktop analysis.

Accessing Database Files on Mobile Devices

Start by copying your app's database file from internal storage to external storage. Use Android Debug Bridge (ADB) commands with the run-as command. Note that your app must be in debug mode for this to work without rooting the device.

Here’s the command structure:

adb shell 'run-as com.your.package cp databases/yourdb.db /sdcard/yourdb.db'

adb pull /sdcard/yourdb.db

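Replace com.your.package with your app's actual package name and yourdb.db with your database filename. This will copy the database to an accessible location and then transfer it to your development machine. If the database uses write-ahead logging (Room enables it by default on most devices), copy the yourdb.db-wal and yourdb.db-shm files as well, or recent writes may be missing from the exported copy. On newer Android versions the copy to /sdcard may fail with a permission error, in which case use the streaming approach instead.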

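Alternatively, stream the database content using the cat command:

adb shell 'run-as com.your.package cat databases/yourdb.db > /sdcard/yourdb.db'

If you're using Windows, opt for adb exec-out instead, for example adb exec-out run-as com.your.package cat databases/yourdb.db > yourdb.db. It streams the raw bytes straight to your development machine, which avoids the line-ending conversion that might otherwise corrupt the database file.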

Once the file is accessible, proceed to live inspection for immediate debugging.

Using Database Inspector for Live Debugging

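Run your app in debug mode on a device or emulator running API level 26 or higher, then open the Database Inspector in Android Studio. Navigate to View > Tool Windows > Database Inspector to access it (in recent Android Studio releases it is a tab under View > Tool Windows > App Inspection). The tool detects your running app and lists the available databases.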

The interface provides a tree view of your database structure, making it easy to browse tables. You can execute custom SQL queries directly in the query tab, with results displayed in real time. If your app uses Room with observable data types like LiveData or Flow, the app's UI will reflect changes as you modify data.

For apps using Room, you can execute DAO (Data Access Object) queries directly. The inspector identifies your DAOs and lets you test their methods with real parameters. Additionally, the query history feature allows you to quickly repeat frequently used commands, saving time during extended debugging sessions.

When you need deeper insights, export your database for desktop analysis.

Exporting Databases for Desktop Analysis

Use the ADB commands mentioned earlier to extract your database file. Open it with DB Browser for SQLite, a tool available on Windows, macOS, and Linux. This software provides features like visual query building, schema editing, and advanced data import/export options.

To open your database, go to File > Open Database in DB Browser for SQLite and select your exported file. Desktop analysis is especially useful for comparing schemas between app versions, performing bulk data operations, or generating detailed reports on database content and structure.

If your app uses multiple databases, desktop tools allow simultaneous access to all files, which is crucial for troubleshooting synchronization or migration issues.

To maintain data privacy, delete temporary copies from your device's external storage once you're done:

adb shell rm /sdcard/yourdb.db


Common SQLite Debugging Problems and Solutions

When working with SQLite, debugging can sometimes feel like solving a puzzle. Issues like permission restrictions, query errors, or handling multiple databases often crop up. Knowing how to tackle these problems can save you a lot of time and frustration.

Managing Permissions and Device Access

Accessing SQLite databases on mobile devices can be tricky, especially with Android's app sandboxing. This feature protects database files by limiting access to the app’s data directory on non-rooted devices. To navigate these restrictions, make sure your app is built in debug mode. Why? Because the adb run-as command only works when the debuggable flag is enabled in your app's manifest. Also, enable USB debugging in Developer Options to allow ADB to communicate with your device. If file system restrictions block access, consider copying files to external storage before transferring them to your computer for inspection.

Fixing Query and Syntax Errors

Once you’ve resolved access issues, the next hurdle is often SQL syntax errors. These errors are common and usually stem from typos, incorrect table names, or poorly structured SQL statements. Tools like Android Studio's Database Inspector make life easier by flagging errors and providing real-time feedback. To minimize mistakes, use the query history feature to review and refine previous queries. Start with simple queries - like a basic SELECT statement - and gradually add conditions. This step-by-step approach helps pinpoint where things go wrong. And don’t forget to double-check that your queries align with your app’s most recent schema definitions to avoid referencing outdated table or column names.

Debugging Apps with Multiple Databases

Apps using multiple SQLite databases bring their own set of challenges, from schema mismatches to data synchronization issues. When debugging these apps, clear organization is key. Android Studio's Database Inspector lets you choose the target database for your queries, so adopting consistent naming conventions for your databases is crucial. Automated tests can help ensure schema consistency across databases, while exporting databases individually allows for easier comparison and troubleshooting. Tools that highlight schema differences can save you hours of manual work. Creating up-to-date schema diagrams can also clarify each database's role and how they interact. For more advanced cases, consider using database versioning strategies to track changes over time. If things get too complicated, teams like Zee Palm specialize in providing tailored solutions to maintain both data security and performance.
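Schema comparison between exported databases can be scripted. The sketch below reads table definitions from `sqlite_master` and reports added, removed, and changed tables. The function names are illustrative, and comparing raw `CREATE TABLE` text is a simplification, since whitespace differences alone would count as a change.

```python
import sqlite3
from typing import Dict, List


def load_schema(conn: sqlite3.Connection) -> Dict[str, str]:
    """Map each user table name to its CREATE TABLE statement."""
    rows = conn.execute(
        "SELECT name, sql FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%'"
    ).fetchall()
    return {name: sql for name, sql in rows}


def diff_schemas(old: Dict[str, str], new: Dict[str, str]) -> Dict[str, List[str]]:
    shared = set(old) & set(new)
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "changed": sorted(name for name in shared if old[name] != new[name]),
    }
```

For exported files, open each with `sqlite3.connect("path/to/exported.db")` and pass the two loaded schemas to `diff_schemas`.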

Best Practices for SQLite Database Debugging

Debugging SQLite databases isn’t just about solving problems as they appear - it’s about adopting habits that help you avoid those issues altogether. Leading mobile app development teams stick to consistent practices that catch bugs early and keep databases running smoothly throughout the development process.

Regular Database Debugging

Making database inspections a regular part of your workflow can save you a lot of headaches down the road. Routine debugging helps identify problems like data corruption, inconsistent states, and performance slowdowns early in the process. If you wait until deployment to uncover these issues, fixing them becomes far more costly and time-consuming.

One of the best tools for this is Android Studio’s Database Inspector, which allows live database inspection during development. Teams that incorporate this tool into their daily workflow often see fewer production problems and can resolve issues faster when they do arise. Beyond these regular checks, automated testing adds another layer of protection for your database.

Automated Database Testing

While manual debugging is helpful, automated testing ensures a level of consistency that human efforts can’t always match. In fact, automated testing can reduce production issues by as much as 40%. This method involves creating tests that validate database operations, schema migrations, and data integrity as part of your CI/CD pipeline.

Key areas to focus on include:

- CRUD operations: Ensuring data can be created, read, updated, and deleted without issues.

- Schema migration tests: Verifying that database upgrades don’t damage existing data.

- Constraint and index validation: Confirming data integrity and maintaining performance.

- Edge case and error handling tests: Checking how your app behaves with invalid or unexpected data.

For Android apps, frameworks like JUnit integrate well with Room or SQLiteOpenHelper, allowing you to write tests that simulate database interactions. These tests run automatically with every code update, catching problems before they affect users. When even automated tests can’t resolve complex issues, turning to experts can make all the difference.
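The same ideas can be tried outside Android. The sketch below uses Python's built-in sqlite3 module rather than JUnit and Room, purely to illustrate what a migration test and a constraint test check. The schema, the migration, and the use of `PRAGMA user_version` to track the schema version are assumptions for this example.

```python
import sqlite3


def create_v1(conn: sqlite3.Connection) -> None:
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute("PRAGMA user_version = 1")


def migrate_to_v2(conn: sqlite3.Connection) -> None:
    # Hypothetical migration: add a nullable column without touching existing rows.
    conn.execute("ALTER TABLE users ADD COLUMN email TEXT")
    conn.execute("PRAGMA user_version = 2")


def test_migration_preserves_rows() -> None:
    conn = sqlite3.connect(":memory:")
    create_v1(conn)
    conn.execute("INSERT INTO users (name) VALUES ('Ada')")
    migrate_to_v2(conn)
    version = conn.execute("PRAGMA user_version").fetchone()[0]
    rows = conn.execute("SELECT id, name, email FROM users").fetchall()
    conn.close()
    assert version == 2
    assert rows == [(1, "Ada", None)]


def test_not_null_constraint() -> None:
    conn = sqlite3.connect(":memory:")
    create_v1(conn)
    try:
        conn.execute("INSERT INTO users (name) VALUES (NULL)")
        rejected = False
    except sqlite3.IntegrityError:
        rejected = True
    conn.close()
    assert rejected
```

An in-memory database keeps each test isolated and fast, which suits a CI/CD pipeline that runs on every commit.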

Getting Expert Development Support

Some database challenges require specialized expertise, especially when dealing with complex schema migrations, multiple databases, or performance optimization. Expert development teams bring years of experience to the table, offering tailored solutions that improve SQLite performance, optimize queries, and establish robust testing practices.

For instance, teams like Zee Palm provide comprehensive support, including automated testing services as part of their development packages. With more than a decade of industry experience, they focus on quality assurance, CI/CD best practices, and code optimization to ensure your app’s database is reliable and high-performing.

This kind of expert support is particularly valuable when database reliability is crucial to your app’s success. Not only do these professionals resolve immediate issues, but they also share knowledge that strengthens your team’s debugging skills over time. The result? Faster development cycles, fewer production problems, and a more stable app for your users. Investing in expert help can ultimately save time and resources while delivering a better product.

FAQs

What are the best tools for debugging SQLite databases in mobile apps, and how do they compare?

When it comes to debugging SQLite databases in mobile apps, some of the most useful tools include Android Studio's Database Inspector, Xcode's Core Data debugging options on iOS, and third-party options like DB Browser for SQLite and SQLite Expert. These tools make it easier to inspect, modify, and resolve database issues.

Each tool has its own strengths. For instance, Android Studio's Database Inspector lets you examine app databases in real time directly within the IDE, which is incredibly handy during development. On the iOS side, Xcode has no direct equivalent, but launch arguments such as -com.apple.CoreData.SQLDebug 1 log the SQL that Core Data issues, which helps when debugging SQLite databases tied to Core Data. Meanwhile, third-party tools such as DB Browser for SQLite offer a cross-platform interface packed with features like running queries and editing schemas.

The tool you choose will depend on your platform, development setup, and the specific debugging features you need.

How can I maintain data integrity and optimize performance when using SQLite in my mobile app?

To ensure data integrity and boost performance when using SQLite in your mobile app, start with a thoughtfully designed database schema. Use transactions to group operations into atomic units, which keeps your data consistent and reduces the risk of errors.

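Add indexes on the columns your queries filter and sort by to make those queries faster, and periodically run the VACUUM command to clean up fragmentation and reclaim unused space. VACUUM rewrites the entire database file, so schedule it for idle moments rather than running it during normal use.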

Handle concurrent access with care to avoid conflicts or data corruption, and aim to limit unnecessary database writes whenever possible. For debugging and improving performance, take advantage of tools designed to pinpoint bottlenecks and identify areas for improvement. These steps will help you create a stable and high-performing database for your app.
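As a small illustration of transactions and indexes, the sketch below uses Python's built-in sqlite3 module. The `accounts` table, its CHECK constraint, and the `transfer` helper are hypothetical. The same SQL applies on Android and iOS.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "id INTEGER PRIMARY KEY, owner TEXT, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany(
    "INSERT INTO accounts (owner, balance) VALUES (?, ?)",
    [("ada", 100), ("bob", 50)],
)
conn.commit()


def transfer(db: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move funds atomically: both updates apply, or neither does."""
    try:
        with db:  # commits on success, rolls back if an exception is raised
            db.execute(
                "UPDATE accounts SET balance = balance + ? WHERE owner = ?",
                (amount, dst),
            )
            db.execute(
                "UPDATE accounts SET balance = balance - ? WHERE owner = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False


# An index on the filtered column lets SQLite search instead of scanning.
conn.execute("CREATE INDEX idx_accounts_owner ON accounts (owner)")
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT balance FROM accounts WHERE owner = ?", ("ada",)
).fetchall()
```

If the second update violates the CHECK constraint, the first one is rolled back too, so no partial transfer is ever stored. The last column of each `plan` row describes how SQLite will run the query, which is a quick way to confirm an index is actually being used.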

How can I troubleshoot common SQLite database issues on Android and iOS?

To tackle frequent SQLite database issues on Android and iOS, the first step is to look for database corruption or file access issues. Tools like Android Studio's Database Inspector or SQLite tools available for iOS can help you review the database structure and data for any irregularities.

Turn on verbose logging to pinpoint errors during database operations. This can also help you confirm that schema migrations are being applied properly, preventing compatibility problems between different app versions. Make sure to thoroughly test your SQL queries for any syntax errors and tweak them to boost performance. If the problem is particularly tricky, reaching out to developers experienced in mobile database optimization can provide valuable insights.
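For an exported database file, the first checks can be scripted. The sketch below uses Python's sqlite3 module to run SQLite's own integrity checks and to log each statement as it executes. The function names are illustrative, and reading `PRAGMA user_version` assumes the app stores its schema version there, as Android's SQLiteOpenHelper does.

```python
import sqlite3
from typing import List


def check_database(path: str) -> dict:
    """Run SQLite's built-in consistency checks against a database file."""
    conn = sqlite3.connect(path)
    try:
        integrity = [row[0] for row in conn.execute("PRAGMA integrity_check")]
        version = conn.execute("PRAGMA user_version").fetchone()[0]
        violations = conn.execute("PRAGMA foreign_key_check").fetchall()
    finally:
        conn.close()
    return {
        "integrity": integrity,  # ["ok"] when no corruption is found
        "user_version": version,
        "foreign_key_violations": len(violations),
    }


def run_with_logging(conn: sqlite3.Connection, sql: str) -> List[str]:
    """Execute a statement while recording every SQL string SQLite runs."""
    seen: List[str] = []
    conn.set_trace_callback(seen.append)
    try:
        conn.execute(sql)
    finally:
        conn.set_trace_callback(None)
    return seen
```

A result other than `["ok"]` from the integrity check points to file corruption, and an unexpected `user_version` suggests a migration did not run.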


Message Brokers in Middleware Integration

Message brokers are software tools that enable distributed systems to communicate efficiently by routing, queuing, and delivering messages. They are essential for middleware integration, allowing diverse applications to exchange data without direct dependencies. By supporting asynchronous communication, message brokers ensure systems function smoothly, even during high traffic or failures.

Key Takeaways:

- Two Messaging Models:

- Point-to-Point (Queue): One producer sends a message to one consumer, ideal for tasks like payment processing.

- Publish/Subscribe (Topic): One producer sends messages to multiple subscribers, suited for real-time updates like notifications.

- Applications: Used in industries like healthcare (EHR integration), IoT (device communication), and SaaS (scalable systems).

- Benefits: Scalability, fault tolerance, asynchronous operations, and reliable message delivery.

- Challenges: Complexity, debugging difficulties, potential latency, and risks of a single point of failure.

Message brokers are vital for modern architectures like microservices and cloud-native environments. They ensure flexibility in communication, enabling businesses to scale and maintain systems efficiently. For tailored solutions, expert teams can simplify implementation and maximize performance.


Core Models and Architecture Patterns in Message Brokers

Message brokers rely on two main models to manage message flow and fulfill business requirements. Understanding these models is key to creating middleware integration solutions that align with specific operational goals.

Point-to-Point Messaging

The point-to-point model uses a queue system where messages are sent from a single producer to one specific consumer. Producers send messages to a queue, where they remain until a consumer retrieves and processes them. Once consumed, the message is removed from the queue, ensuring it is only handled once.

This model is perfect for transactional workflows that demand reliable delivery and strict order. For instance, financial systems use this approach for payment processing, where handling duplicate transactions could lead to significant issues. Similarly, job scheduling systems rely on queues to assign tasks to individual workers, ensuring each task is completed by only one worker.

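A standout feature of this model is its ability to balance workloads. By sharing the queue among multiple consumers, it distributes messages efficiently, enabling scalable processing. This is particularly helpful during high-traffic periods when extra processing capacity is needed. Keep in mind that with several consumers on one queue, messages may finish processing out of order, so workflows that need strict ordering typically use a single consumer or partition messages by key.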

Additionally, its fault-tolerance mechanisms ensure messages assigned to a failed consumer are reassigned, preventing data loss.
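The competing-consumers behavior can be sketched with an in-process queue. The example below is only an analogy using Python's standard library, not a real broker: several worker threads share one queue, and each task is handled by exactly one of them. The function and worker names are illustrative.

```python
import queue
import threading
from typing import Iterable, List, Tuple


def run_workers(tasks: Iterable[int], worker_count: int = 3) -> List[Tuple[str, int]]:
    """Distribute tasks across workers; each task is processed exactly once."""
    q: "queue.Queue[int]" = queue.Queue()
    for task in tasks:
        q.put(task)

    processed: List[Tuple[str, int]] = []
    lock = threading.Lock()

    def worker(name: str) -> None:
        while True:
            try:
                task = q.get_nowait()  # removes the message from the queue
            except queue.Empty:
                return
            with lock:
                processed.append((name, task))
            q.task_done()

    threads = [
        threading.Thread(target=worker, args=(f"worker-{i}",))
        for i in range(worker_count)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return processed
```

Which worker handles which task varies from run to run, but no task is handled twice, which is the property that matters for payment-style workloads.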

Publish/Subscribe Messaging

The publish/subscribe model operates on a topic-based system. Publishers send messages to specific topics, and all subscribers to those topics receive a copy of the message. This creates a one-to-many communication flow, ideal for distributing real-time information across various systems.

This model supports loose coupling - publishers don’t need to know who the subscribers are, and subscribers don’t need to know who the publishers are. This independence allows systems to evolve separately while maintaining communication. For example, if a new service needs to receive certain messages, it can simply subscribe to the relevant topic without modifying existing publishers.

This model thrives in event-driven systems where multiple services need to act simultaneously. Take an e-commerce scenario: when a customer places an order, the order service can publish an "order created" event. The inventory, shipping, and notification services can all subscribe to this topic and act accordingly, all in real time.

Stock trading platforms use this model to broadcast price updates to thousands of traders instantly. Similarly, social media platforms rely on it to send notifications to users in real time.
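A minimal in-memory sketch of the publish/subscribe flow follows. The `Broker` class and the `order.created` topic name are illustrative. A real broker would add persistence, network transport, and asynchronous delivery.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class Broker:
    """Toy topic-based broker: every subscriber to a topic gets a copy."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        """Deliver a copy to each subscriber and return how many received it."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(dict(message))  # copy so subscribers cannot affect each other
        return len(handlers)
```

The publisher never references its subscribers directly, so adding a new service is a single `subscribe` call with no change to the order service.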

Integration with Modern Architectures

These messaging models play a critical role in modern architectures, especially in microservices environments, where applications are broken into smaller, independent services. Message brokers allow these services to communicate without direct dependencies, enabling teams to deploy, scale, and maintain services independently. This setup accelerates development cycles and reduces the risk of system-wide failures.

In cloud-native environments, brokers ensure stable communication as containerized services scale dynamically. This capability supports auto-scaling and fault recovery - key features in cloud operations.

For organizations transitioning to the cloud, hybrid architectures leverage brokers to bridge legacy systems with modern cloud services. By translating protocols, brokers ensure seamless integration, making them invaluable for gradual cloud migrations.

Message brokers also excel at concurrent processing, allowing multiple clients to access shared resources without conflicts. This is critical for high-traffic applications that must maintain performance while serving thousands of users.

| Architecture Pattern | Communication Model | Primary Benefit | Best Use Case |
| --- | --- | --- | --- |
| Point-to-Point | One-to-one (queue) | Ensures single processing | Payment systems, task distribution |
| Publish/Subscribe | One-to-many (topic) | Enables real-time updates | Event notifications, data streaming |
| Hub-and-Spoke | Centralized broker | Simplifies management | Small to medium deployments |
| Message Bus | Distributed brokers | Supports scalability | Large-scale, geographically dispersed systems |

These models provide the foundation for creating flexible architectures that meet a variety of integration needs. They are essential for building high-performance applications capable of adapting to the demands of digital transformation.

For organizations tackling complex integration challenges, expert guidance can make all the difference. Teams like Zee Palm (https://zeepalm.com) specialize in designing middleware solutions tailored to industries such as healthcare, IoT, SaaS, and blockchain. Their expertise ensures businesses can harness the full potential of these communication models.

Pros and Cons of Message Brokers

Message brokers are powerful tools for managing communication in distributed systems, but they come with their own set of challenges. Understanding the benefits and limitations can help teams decide when and how to use them effectively.

Benefits of Message Brokers

One of the biggest advantages of message brokers is scalability. They allow producers and consumers to grow independently, which means you can add more message publishers without worrying about overwhelming the consumers. This is especially useful during traffic spikes or when scaling up your system, as workloads can be distributed across multiple consumers to handle the load efficiently.

Another key benefit is fault tolerance. If a consumer goes offline - whether due to maintenance, failure, or overload - the message broker can temporarily store messages in a queue. This ensures that no data is lost and that operations can resume smoothly once the consumer is back online. Many brokers also offer automatic retries for failed messages, adding an extra layer of reliability.
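
The buffering-and-retry behavior described above can be sketched in a few lines. This is a simplified model under stated assumptions: `drain_with_retries`, the `max_attempts` limit, and the `flaky_handler` consumer are hypothetical names for illustration, and real brokers usually add backoff delays and a configurable dead-letter destination.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple


def drain_with_retries(
    messages: List[str],
    handler: Callable[[str], None],
    max_attempts: int = 3,
) -> Tuple[List[str], List[str]]:
    """Process queued messages, re-queueing failures up to max_attempts.

    Returns (processed, dead_lettered).
    """
    queue: Deque[Tuple[str, int]] = deque((m, 1) for m in messages)
    processed: List[str] = []
    dead_lettered: List[str] = []
    while queue:
        message, attempt = queue.popleft()
        try:
            handler(message)
            processed.append(message)
        except Exception:
            if attempt < max_attempts:
                queue.append((message, attempt + 1))  # keep the message and retry later
            else:
                dead_lettered.append(message)  # give up, but keep it for inspection
    return processed, dead_lettered


calls: Dict[str, int] = {}


def flaky_handler(message: str) -> None:
    """Hypothetical consumer: 'b' fails once, 'c' always fails."""
    calls[message] = calls.get(message, 0) + 1
    if message == "b" and calls[message] == 1:
        raise RuntimeError("temporary outage")
    if message == "c":
        raise RuntimeError("permanent failure")


processed, dead = drain_with_retries(["a", "b", "c"], flaky_handler)
```

In this run, the message that failed once is eventually processed, while the message that keeps failing is set aside instead of blocking the queue.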

Asynchronous communication is another strength. By removing the need for immediate responses, message brokers improve responsiveness on the front end. For instance, a payment processing system can accept user requests instantly while handling the actual transaction in the background. This also ties into system decoupling, which allows teams to update individual services without affecting the entire system. It speeds up development and reduces the risk of system-wide failures during updates.

Finally, reliable message delivery is critical in scenarios like financial transactions or medical data processing. With persistence mechanisms that ensure messages are stored until successfully processed, message brokers reduce the risk of data loss - even in high-stakes situations.

Challenges and Limitations

While the benefits are clear, message brokers also introduce complexities that can’t be ignored.

Added complexity is one of the main hurdles. Setting up a message broker involves more than just plugging it into your system. Teams need to configure it correctly, monitor its performance, and possibly learn new tools. This can slow down initial development and require specialized expertise.

Eventual consistency issues are another challenge, especially in distributed systems. For example, if a customer updates their address, it might take time for that information to sync across all connected systems. This delay can lead to temporary discrepancies, such as incorrect billing or order processing.

Debugging difficulties can also arise. When problems occur across multiple services and message queues, traditional debugging methods often fall short. Teams may need specialized tools to trace messages and diagnose issues, which can complicate troubleshooting.

Potential latency is another consideration. Although queuing and routing delays are usually minor, they can add up in real-time applications, potentially impacting performance. For systems that rely on instant responses, this latency could be a dealbreaker.

Lastly, single point of failure concerns are significant in centralized broker architectures. If the broker itself goes down, communication between all connected services halts. While high-availability configurations can reduce this risk, they also increase costs and complexity.

Comparison Table

Here’s a side-by-side look at the key pros and cons:

| Aspect | Advantages | Disadvantages |
| --- | --- | --- |
| Scalability | Independent scaling of producers and consumers | Requires careful capacity planning for brokers |
| Fault Tolerance | Message buffering and retry capabilities | Broker failure can disrupt the entire system |
| System Decoupling | Independent updates for services | Debugging across services becomes more complex |
| Communication | Supports asynchronous operations | May introduce eventual consistency issues |
| Reliability | Ensures message delivery through persistence | Queuing and routing may add latency |
| Management | Centralizes tracking and monitoring | Increases operational complexity and overhead |
| Integration | Works with diverse protocols and platforms | Requires specialized expertise and tools |

The choice to use a message broker depends on your system’s needs. For applications handling large volumes of data, requiring fault tolerance, or operating in distributed environments, the benefits often outweigh the challenges. On the other hand, simpler systems with straightforward communication needs might find the added complexity unnecessary.

If your team is weighing these trade-offs, expert advice can make a big difference. Organizations like Zee Palm (https://zeepalm.com) specialize in middleware integration and can help tailor message broker solutions to your specific needs, minimizing complexity while maximizing benefits.

Message Broker Use Cases

Message brokers play a key role in enabling smooth communication across industries like finance, healthcare, and smart technology. By simplifying the integration of complex systems, they help create efficient and dependable operations. Let’s explore how these tools are used in real-world scenarios to enhance performance across different sectors.

Transactional Workflows and Event-Driven Pipelines

Message brokers are crucial for payment systems and e-commerce platforms, ensuring secure, reliable, and orderly message delivery. They prevent delays in one service - such as fraud detection, inventory management, or shipping - from causing disruptions to the entire operation.

By separating data producers (like IoT sensors, mobile apps, or web applications) from consumers (such as analytics engines or storage systems), message brokers enhance scalability and maintain fault-tolerant data processing. This separation helps critical transactions complete even if a specific service temporarily goes offline, because messages wait in the broker until that service recovers. Both point-to-point and publish/subscribe messaging models support this pattern.

Healthcare and Medical Applications

In healthcare, message brokers facilitate the secure and efficient exchange of critical patient data. They integrate systems like Electronic Health Records (EHR), medical IoT devices, and diagnostic tools. For instance, patient vitals from devices such as heart monitors or blood pressure cuffs are routed through brokers to update EHR systems and dashboards in real time. This ensures consistent data updates, compliance with healthcare standards, and timely decision-making.

AI-powered diagnostic tools also benefit from these data streams by analyzing patient information for predictive alerts and automated insights. This allows healthcare providers to make quick, informed decisions, whether in traditional clinical settings or during telemedicine consultations. By enabling real-time updates, message brokers are transforming healthcare workflows and improving patient outcomes.

IoT and Smart Technology Solutions

In the world of IoT, message brokers act as intermediaries between edge devices and central systems, ensuring reliable, asynchronous communication. For example, in smart home setups, devices like thermostats, security cameras, lighting systems, and door locks communicate through brokers to coordinate their operations seamlessly.

In industrial automation, brokers handle tasks like aggregating sensor data, triggering alerts, and coordinating machine actions. This enables predictive maintenance and optimizes processes. These systems have proven scalability, with some implementations consolidating data from over 150 sources to support both real-time and batch analytics.

To make the most of these technologies, expert guidance is essential. Teams with specialized knowledge in fields like healthcare, IoT, and AI can design integration patterns that maximize the benefits of message broker architectures while keeping complexity in check. At Zee Palm (https://zeepalm.com), we specialize in building scalable, secure, and interoperable middleware solutions that help businesses run more efficiently.

Best Practices for Message Broker Implementation

Getting the most out of a message broker requires a focus on data integrity, security, and scalability. These factors are the backbone of successful middleware integration, ensuring your system performs well even under demanding conditions. Below, we’ll dive into the key practices that help create reliable, secure, and scalable message workflows.

Data Consistency and Accuracy

Maintaining accurate and consistent data across systems is critical. To achieve this, message validation and schema enforcement should be in place. These steps ensure that every application interprets incoming data correctly. Additionally, techniques like idempotent message processing and deduplication help prevent errors such as duplicate entries, while transactional queues ensure operations are completed fully or rolled back if something goes wrong.
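
As a rough illustration of schema enforcement, the sketch below checks a message against a declared set of fields before it is accepted. The `ORDER_SCHEMA` fields and the `validate` helper are assumptions invented for this example; production systems more often rely on a schema format such as JSON Schema, Avro, or Protobuf.

```python
from typing import Any, Dict, List

# Hypothetical schema: field name -> required Python type.
ORDER_SCHEMA: Dict[str, type] = {
    "order_id": int,
    "amount_cents": int,
    "currency": str,
}


def validate(message: Dict[str, Any], schema: Dict[str, type]) -> List[str]:
    """Return a list of problems; an empty list means the message conforms."""
    errors: List[str] = []
    for field, expected_type in schema.items():
        if field not in message:
            errors.append(f"missing field: {field}")
        elif type(message[field]) is not expected_type:
            actual = type(message[field]).__name__
            errors.append(
                f"wrong type for {field}: expected {expected_type.__name__}, got {actual}"
            )
    for field in message:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


good = {"order_id": 1001, "amount_cents": 2599, "currency": "USD"}
bad = {"order_id": "1001", "currency": "USD", "note": "rush"}

good_errors = validate(good, ORDER_SCHEMA)
bad_errors = validate(bad, ORDER_SCHEMA)
```

Rejecting or quarantining a message at this point keeps malformed data from reaching downstream consumers that would each have to handle it separately.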

For workflows like financial transactions, exactly-once processing is non-negotiable. Imagine a payment system where duplicate charges occur due to network retries - this is precisely the kind of problem exactly-once semantics prevent. Because a network can always redeliver a message, this effect is typically achieved by combining at-least-once delivery with idempotent or transactional processing, which keeps account balances accurate and avoids customer dissatisfaction.
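
One common way to avoid duplicate charges is an idempotent consumer that remembers which message IDs it has already applied. The sketch below assumes each message carries a unique `message_id`; the `PaymentLedger` class is hypothetical, and a real system would store the seen IDs durably and update them in the same transaction as the balance.

```python
from typing import Dict, Set


class PaymentLedger:
    """Applies each charge at most once, keyed by message ID."""

    def __init__(self) -> None:
        self.balances: Dict[str, int] = {}
        self._seen_ids: Set[str] = set()

    def apply_charge(self, message_id: str, account: str, amount_cents: int) -> bool:
        """Return True if the charge was applied, False if it was a duplicate."""
        if message_id in self._seen_ids:
            return False  # redelivery after a retry: ignore it
        self.balances[account] = self.balances.get(account, 0) + amount_cents
        self._seen_ids.add(message_id)
        return True


ledger = PaymentLedger()
first = ledger.apply_charge("msg-1", "acct-42", 500)
retry = ledger.apply_charge("msg-1", "acct-42", 500)  # same message delivered again
other = ledger.apply_charge("msg-2", "acct-42", 250)
```

With this check in place, the broker can safely redeliver after a timeout, because processing the same message twice has the same effect as processing it once.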

To maintain atomicity across multiple services, especially in distributed systems, protocols like two-phase commit can be invaluable. These protocols help ensure that all parts of a transaction succeed or fail together, even in the face of network disruptions.
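
The core idea of two-phase commit fits in a short sketch: a coordinator first collects votes, then tells every participant to commit or roll back. The `Participant` class and service names here are illustrative assumptions, and the sketch deliberately omits timeouts, durable logging, and coordinator recovery, which a real implementation needs.

```python
from typing import List


class Participant:
    """A service taking part in a distributed transaction (simplified)."""

    def __init__(self, name: str, can_commit: bool = True) -> None:
        self.name = name
        self.can_commit = can_commit
        self.state = "idle"

    def prepare(self) -> bool:
        # Phase 1: vote yes only if the local work can be made permanent.
        self.state = "prepared" if self.can_commit else "refused"
        return self.can_commit

    def commit(self) -> None:
        self.state = "committed"

    def rollback(self) -> None:
        self.state = "rolled_back"


def two_phase_commit(participants: List[Participant]) -> bool:
    """Commit everywhere only if every participant votes yes."""
    votes = [p.prepare() for p in participants]  # phase 1: collect all votes
    if all(votes):
        for p in participants:  # phase 2a: unanimous yes, so commit
            p.commit()
        return True
    for p in participants:  # phase 2b: any no, so roll everyone back
        p.rollback()
    return False


ok_group = [Participant("orders"), Participant("payments")]
ok_result = two_phase_commit(ok_group)

failing_group = [Participant("orders"), Participant("inventory", can_commit=False)]
failed_result = two_phase_commit(failing_group)
```

A single refusing participant is enough to roll back the whole group, which is what keeps the services from ending up in partially updated states.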

When integrating legacy systems with modern platforms, auto-schema mapping and built-in transformations are game-changers. For example, in healthcare, brokers can automatically convert HL7 messages into FHIR format, bridging the gap between older hospital systems and newer cloud-based applications. This ensures data integrity while modernizing operations.

Security and Compliance Requirements

Once data accuracy is addressed, securing your message flows becomes the next priority. Encryption and strong authentication mechanisms are must-haves, particularly for sensitive data. TLS protects messages in transit between clients and the broker, and encrypted queues protect them at rest; where the broker itself should not be able to read the payload, producers can additionally encrypt message bodies end to end. This is especially crucial in industries where data breaches can lead to hefty fines and legal troubles.

Role-based access control (RBAC) adds another layer of security, limiting access to message queues based on user roles. For instance, in a healthcare setting, only licensed medical professionals might have access to patient data streams, while administrative staff would be restricted to scheduling or billing information.
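
A role check of this kind can be as simple as a lookup from role to permitted topics. The role names, topic names, and `can_subscribe` helper below are hypothetical and chosen to mirror the healthcare example; real brokers expose their own permission models, which should be used instead of application-level checks where available.

```python
from typing import Dict, Set

# Hypothetical role-to-topic permissions for a healthcare deployment.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "clinician": {"patient.vitals", "scheduling"},
    "admin_staff": {"scheduling", "billing"},
}


def can_subscribe(role: str, topic: str) -> bool:
    """Allow a subscription only if the role explicitly lists the topic."""
    return topic in ROLE_PERMISSIONS.get(role, set())


clinician_sees_vitals = can_subscribe("clinician", "patient.vitals")
admin_sees_vitals = can_subscribe("admin_staff", "patient.vitals")
unknown_role = can_subscribe("contractor", "billing")
```

Unknown roles fall through to an empty permission set, so access is denied by default rather than granted by omission.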

For industries like healthcare or finance, compliance with regulations such as HIPAA or GDPR is essential. In practice, this means encrypted data transmission, detailed audit logs, and traceable messages. For example, the HIPAA Security Rule requires audit controls that record and examine activity in systems handling electronic protected health information, so teams commonly log each message containing patient data from origin to destination, with records of who accessed what and when. GDPR adds requirements for secure data handling and for erasing personal data upon a valid request.

Additional safeguards like network segmentation and continuous monitoring help detect and respond to threats proactively. Isolating broker traffic from general network traffic and monitoring for suspicious activity ensures that potential issues are flagged before they escalate.

Scalability and Reliability Optimization

As your business grows, your message broker infrastructure should grow with it. Horizontal scaling is key here. Brokers that support load balancing across nodes can handle increasing message volumes without bottlenecks. Apache Kafka, for instance, uses partitions and replication to manage high throughput while ensuring fault tolerance through automated failover systems.
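
Partitioning is what lets several nodes share the load while keeping related messages together. The sketch below shows the general idea of key-based partition assignment; it is not Kafka's actual partitioner, and the `partition_for` function and the choice of SHA-256 are assumptions made so the example is deterministic across runs.

```python
import hashlib
from collections import Counter


def partition_for(key: str, num_partitions: int) -> int:
    """Map a message key to a partition using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


NUM_PARTITIONS = 4

# Messages with the same key always land on the same partition,
# which preserves per-key ordering while spreading keys across nodes.
first = partition_for("customer-17", NUM_PARTITIONS)
again = partition_for("customer-17", NUM_PARTITIONS)

# Rough view of how 1,000 hypothetical keys spread over the partitions.
spread = Counter(partition_for(f"customer-{i}", NUM_PARTITIONS) for i in range(1000))
```

Python's built-in `hash()` is avoided here because its output for strings varies between interpreter runs, which would send the same key to different partitions after a restart.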

To avoid data loss during node failures, message persistence is essential. Configuring brokers to save messages to disk ensures that critical data remains intact even during hardware or network outages. This is particularly important in IoT scenarios, where sensor data may be collected sporadically and must be queued reliably for processing.
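
The essence of message persistence is writing each message to durable storage before treating it as accepted, then replaying the log after a restart. The `DurableLog` class below is a minimal sketch under that assumption; the file layout (one JSON object per line) and the sensor fields are invented for illustration, and real brokers use far more efficient segment files and indexes.

```python
import json
import os
import tempfile
from typing import Iterator


class DurableLog:
    """Append-only message log backed by a file, one JSON message per line."""

    def __init__(self, path: str) -> None:
        self.path = path

    def append(self, message: dict) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(message) + "\n")
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the write to disk

    def replay(self) -> Iterator[dict]:
        """Yield every stored message in the order it was written."""
        if not os.path.exists(self.path):
            return
        with open(self.path, "r", encoding="utf-8") as f:
            for line in f:
                if line.strip():
                    yield json.loads(line)


log_path = os.path.join(tempfile.mkdtemp(), "sensor.log")

writer = DurableLog(log_path)
writer.append({"sensor": "t1", "value": 21.5})
writer.append({"sensor": "t1", "value": 21.7})

# Simulate a restart: a fresh object reads back what the old one wrote.
recovered = list(DurableLog(log_path).replay())
```

Calling `fsync` on every message trades throughput for durability; many brokers let you tune how often data is flushed for exactly this reason.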

Monitoring is another critical component of scalability. Real-time monitoring tools like Prometheus and Grafana provide visibility into metrics such as queue length, message latency, and delivery success rates. Automated alerts can notify your team of issues like message backlogs or failures, allowing for quick resolution.
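
The alerting logic behind such dashboards often comes down to comparing a few queue metrics against thresholds. The sketch below is independent of any monitoring product: the `QueueStats` fields, the `alerts_for` helper, and the threshold values are all assumptions chosen for illustration and should be tuned to the workload.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QueueStats:
    name: str
    depth: int                   # messages currently waiting
    oldest_message_age_s: float  # how long the oldest message has waited
    delivered: int               # successful deliveries in the window
    failed: int                  # failed deliveries in the window


def alerts_for(
    stats: QueueStats,
    max_depth: int = 1000,
    max_age_s: float = 30.0,
    min_success_rate: float = 0.99,
) -> List[str]:
    """Return human-readable alerts for any threshold the queue violates."""
    alerts: List[str] = []
    if stats.depth > max_depth:
        alerts.append(f"{stats.name}: backlog of {stats.depth} messages")
    if stats.oldest_message_age_s > max_age_s:
        alerts.append(f"{stats.name}: oldest message waiting {stats.oldest_message_age_s:.0f}s")
    total = stats.delivered + stats.failed
    if total > 0 and stats.delivered / total < min_success_rate:
        alerts.append(f"{stats.name}: delivery success rate below {min_success_rate:.0%}")
    return alerts


healthy = alerts_for(QueueStats("orders", depth=12, oldest_message_age_s=0.4, delivered=5000, failed=0))
troubled = alerts_for(QueueStats("shipping", depth=4200, oldest_message_age_s=95.0, delivered=900, failed=100))
```

Tracking the age of the oldest message alongside queue depth helps distinguish a busy but healthy queue from one whose consumers have stalled.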

Finally, concurrent processing capabilities allow brokers to handle multiple message streams simultaneously. This is especially useful in environments like e-commerce, where tasks such as inventory updates, payment processing, and shipping notifications need to run in parallel without delays.
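
As a small illustration of parallel handling, the sketch below runs three independent handlers for the same order on a thread pool. The handler functions and the `fan_out` helper are hypothetical stand-ins for real services; in an actual deployment each would be a separate consumer reading from the broker.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def update_inventory(order: dict) -> str:
    return f"inventory reserved for order {order['order_id']}"


def process_payment(order: dict) -> str:
    return f"payment captured for order {order['order_id']}"


def notify_shipping(order: dict) -> str:
    return f"shipping notified for order {order['order_id']}"


def fan_out(order: dict, handlers: Dict[str, Callable[[dict], str]]) -> Dict[str, str]:
    """Run every handler concurrently and collect results by handler name."""
    with ThreadPoolExecutor(max_workers=len(handlers)) as pool:
        futures = {name: pool.submit(fn, order) for name, fn in handlers.items()}
        # result() waits for completion and re-raises any handler exception.
        return {name: future.result() for name, future in futures.items()}


results = fan_out(
    {"order_id": 1001},
    {
        "inventory": update_inventory,
        "payment": process_payment,
        "shipping": notify_shipping,
    },
)
```

Because the handlers do not depend on each other's results, a slow shipping call does not delay the inventory or payment work.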